AI is already routine in German businesses, and since February 2025 the first obligations of the EU AI Act have been mandatory. While most companies focus on use cases and technology evaluation, the EU AI Act puts regulatory risk in sharp focus. With penalties of up to €35 million or 7% of global annual turnover (whichever is higher) for the most serious violations, AI governance is now a fundamental necessity for executives.
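As a rough illustration of the penalty ceiling, the sketch below computes the maximum fine for the most serious violations (prohibited AI practices): €35 million or 7% of worldwide annual turnover, whichever is higher. For SMEs and start-ups, the Act applies whichever of the two is lower. The function name `max_fine_eur` and its parameters are hypothetical. The sketch assumes turnover is given in whole euros, and it ignores the lower fine tiers for other violations as well as how authorities set a fine within the cap.

```python
# Hypothetical helper: upper bound of an EU AI Act fine for prohibited AI practices.
# Assumes turnover is given in whole euros; lower tiers for other violations are ignored.
FIXED_CAP_EUR = 35_000_000   # fixed amount for the most serious violations
TURNOVER_PERCENT = 7         # share of worldwide annual turnover


def max_fine_eur(global_annual_turnover_eur: int, is_sme: bool = False) -> int:
    """Return the fine ceiling: the higher of the two caps, or the lower one for SMEs."""
    turnover_cap = global_annual_turnover_eur * TURNOVER_PERCENT // 100
    if is_sme:
        return min(FIXED_CAP_EUR, turnover_cap)
    return max(FIXED_CAP_EUR, turnover_cap)


if __name__ == "__main__":
    # EUR 2 billion turnover: 7% (EUR 140 million) exceeds the EUR 35 million amount.
    print(max_fine_eur(2_000_000_000))  # 140000000
    # SME with EUR 10 million turnover: the lower cap (EUR 700,000) applies.
    print(max_fine_eur(10_000_000, is_sme=True))  # 700000
```

For large companies the fixed amount acts as a floor: the ceiling never drops below €35 million, and above €500 million in turnover the percentage-based cap takes over.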

EU AI Act 2025: What Businesses Need to Know Now

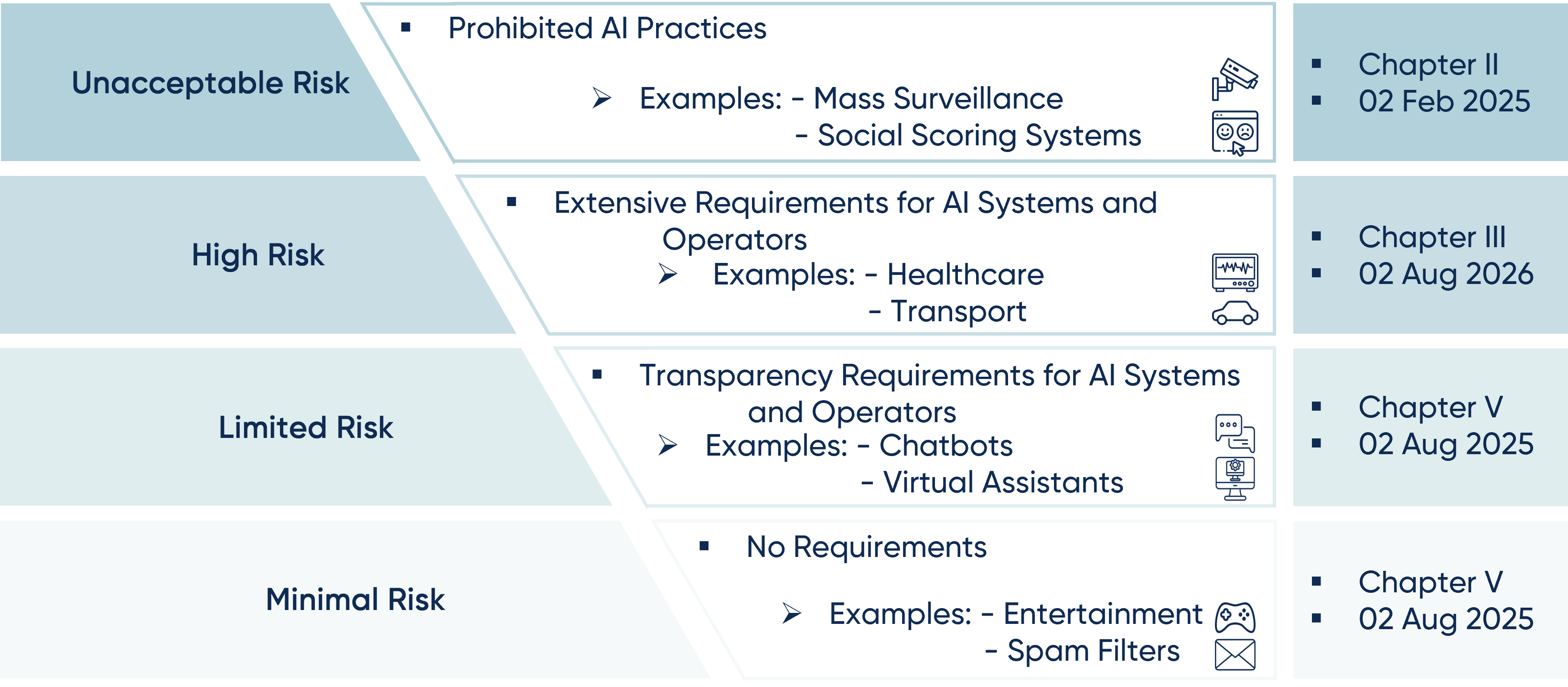

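The EU’s risk-based approach classifies AI systems into four categories: unacceptable risk (prohibited), high risk, limited risk, and minimal risk. High-risk AI systems, i.e. those that pose significant risks to health, safety, or the fundamental rights of citizens, must meet the following requirements, which are being phased in from 2025 onward (most high-risk obligations apply from August 2026):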

- Transparency obligations (documentation of data sources & decision logic)

- Technical robustness (cybersecurity, fault tolerance)

- Human oversight (ability to override AI decisions)

- Conformity assessments, in certain cases carried out by independent notified bodies
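To make the tiered structure concrete, a compliance team might track obligations per risk tier in a simple lookup table. The sketch below is a simplified, hypothetical model: the names `RiskTier`, `OBLIGATIONS`, and `compliance_gaps` are illustrative, and the obligation keys mirror only the high-risk requirements listed above plus the transparency duty for limited-risk systems. It is not a substitute for a legal classification of a given system.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories of the EU AI Act's risk-based approach."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified mapping from tier to obligation keys (not exhaustive).
OBLIGATIONS: dict[RiskTier, list[str]] = {
    RiskTier.HIGH: [
        "transparency",           # documentation of data sources & decision logic
        "technical_robustness",   # cybersecurity, fault tolerance
        "human_oversight",        # ability to override AI decisions
        "conformity_assessment",
    ],
    RiskTier.LIMITED: ["transparency_to_users"],  # e.g. disclosing that users interact with AI
    RiskTier.MINIMAL: [],
}


def compliance_gaps(tier: RiskTier, completed: set[str]) -> list[str]:
    """Return the obligations for `tier` not yet in `completed`, in a fixed order."""
    if tier is RiskTier.UNACCEPTABLE:
        # Prohibited systems cannot be made compliant by ticking off obligations.
        raise ValueError("unacceptable-risk AI systems are prohibited")
    return [item for item in OBLIGATIONS[tier] if item not in completed]


if __name__ == "__main__":
    done = {"transparency", "human_oversight"}
    print(compliance_gaps(RiskTier.HIGH, done))
    # ['technical_robustness', 'conformity_assessment']
```

Raising an error for the unacceptable tier, rather than returning an empty list, keeps a prohibited system from ever appearing "compliant" in such a tracker.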

The EU AI Act primarily aims to classify and manage risks associated with AI. Businesses are now legally required to deploy AI technologies safely, ethically, and responsibly.

![Portrait](/fileadmin/_processed_/2/a/csm_2024-04-19-PORTRAET-Friedich-Jochen_939a81a19c.jpg)